ENHANCING MULTILINGUAL TTS WITH VOICE CONVERSION BASED DATA AUGMENTATION AND POSTERIOR EMBEDDING

Hyun-Wook Yoon, Jin-Seob Kim, Ryuichi Yamamoto, Ryo Terashima, Chan-Ho Song, Jae-Min Kim, Eunwoo Song

Abstract

This paper proposes a multilingual, multi-speaker (MM) text-to-speech (TTS) system that uses voice conversion (VC)-based data augmentation. Building an MM-TTS model is challenging because polyglot data are difficult to collect from multiple speakers. To address this problem, we adopt a cross-lingual, multi-speaker (CM) VC model trained on multiple speakers’ monolingual databases. Because this model transfers acoustic attributes effectively while retaining the content information, it can generate polyglot corpora for each speaker. We then design the MM-TTS model with variational autoencoder (VAE)-based posterior embeddings. Note that incorporating VC-augmented polyglot corpora into TTS training might degrade synthetic quality, since the corpora sometimes contain unwanted artifacts. To mitigate this issue, the VAE is trained to capture the acoustic dissimilarity between the recorded and VC-augmented datasets. By selectively choosing posterior embeddings obtained from the original recordings in the training set, the proposed model generates acoustically clearer voices.

Figures

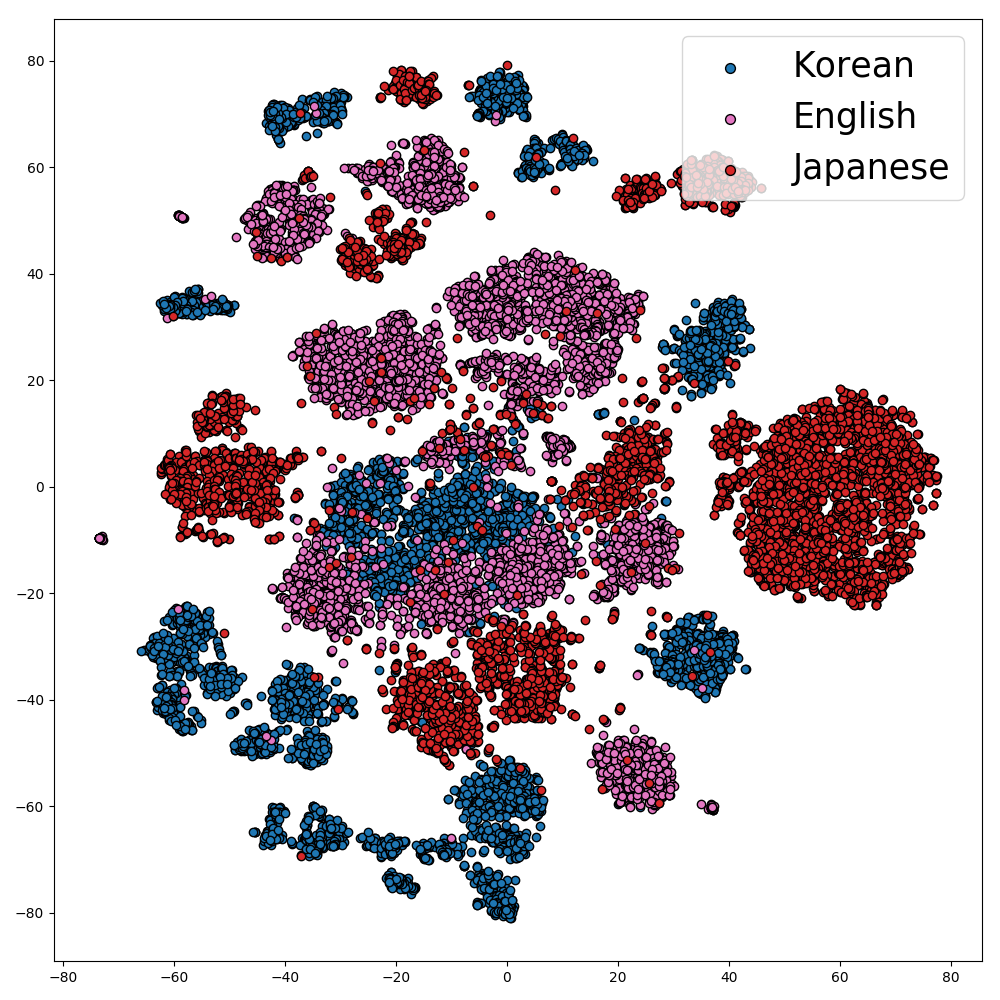

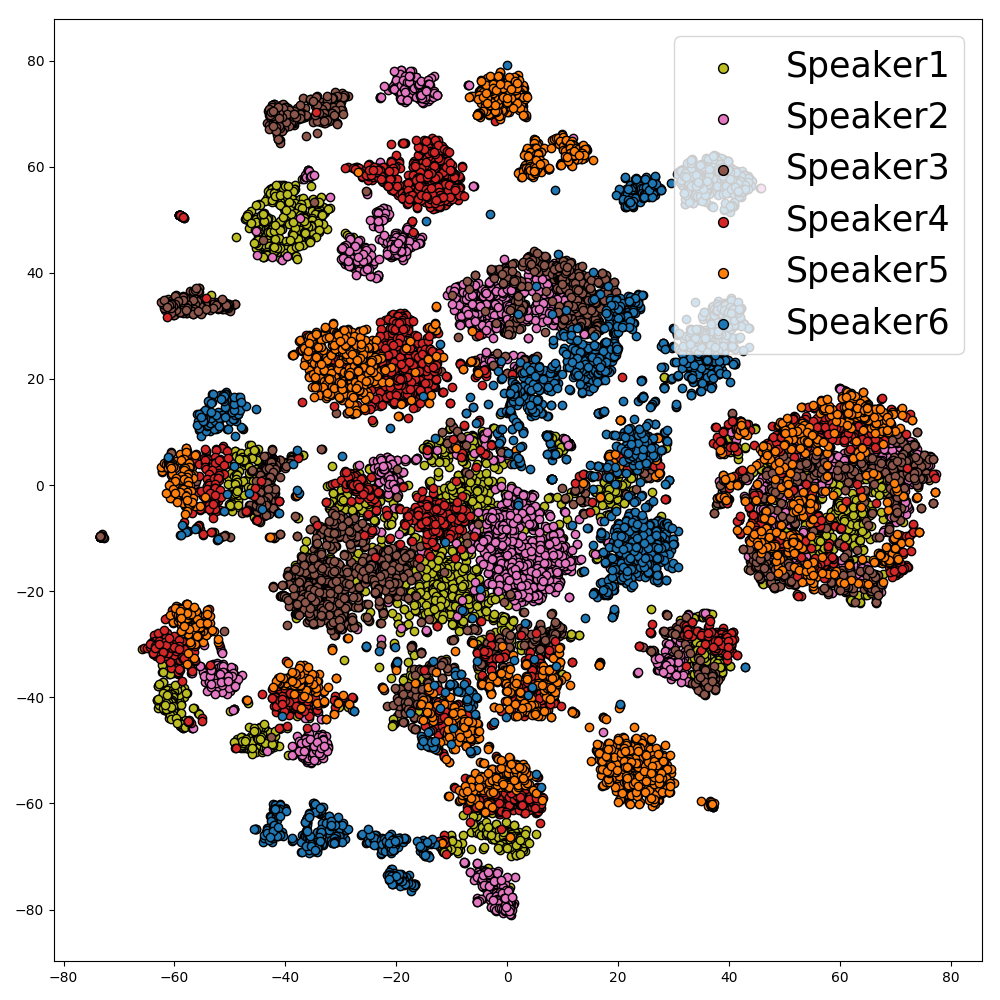

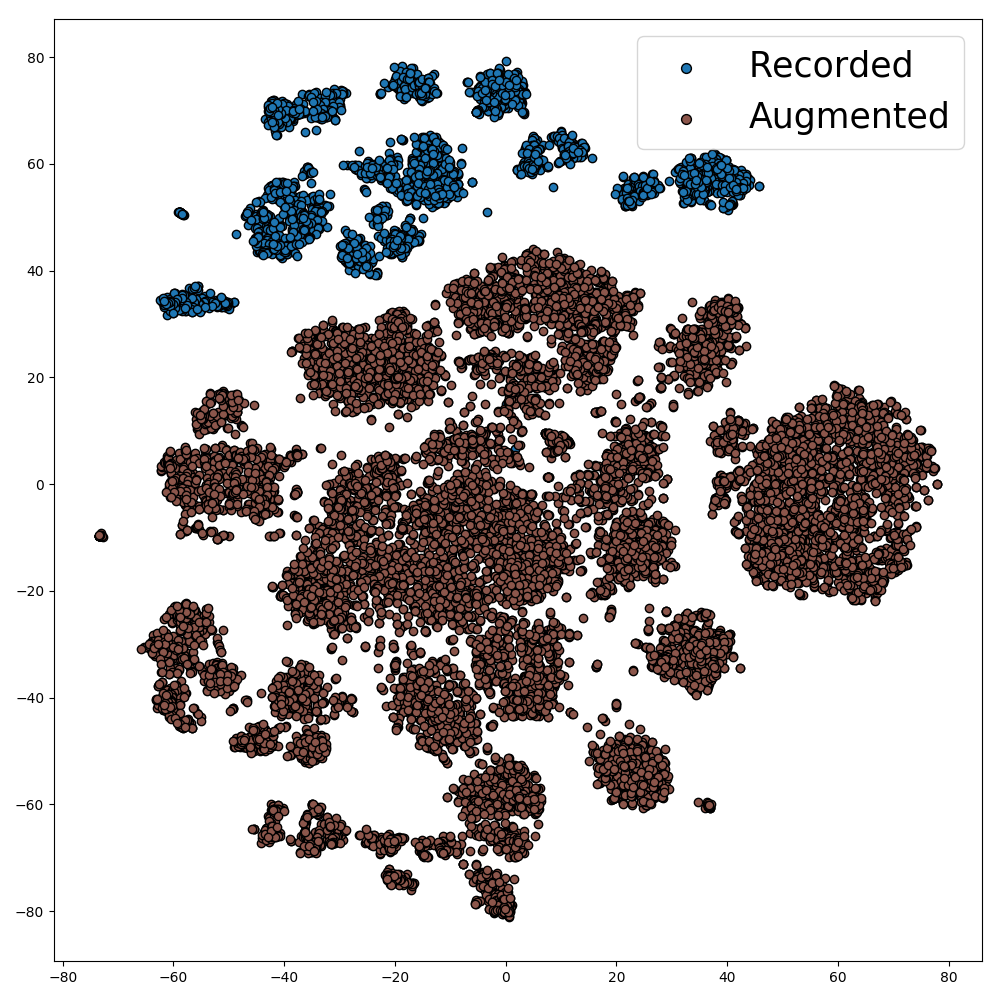

In this paper, we incorporated the VAE structure into the learning process and confirmed that it can distinguish between the characteristics of real (recorded) audio and augmented (voice-converted) audio. By using only the characteristics of real audio at inference, we were able to synthesize higher-quality audio than a model trained on the augmented database without this selection. The figures show the embeddings in the latent space of the trained VAE, grouped by each characteristic. Each point is a posterior embedding extracted from one audio sample, and its color or shape indicates the language, the speaker, or whether the sample was produced by voice conversion (VC). The embeddings separate more distinctly by VC presence than by language or speaker.
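The selection step described above can be sketched as follows. This is only an illustrative sketch, not the authors' implementation: the function name `select_recorded_embedding`, the array layout, and the choice to average the selected embeddings are all assumptions made for the example. It assumes that each training utterance already has a posterior embedding, a speaker label, and a flag marking whether it was VC-augmented.

```python
import numpy as np


def select_recorded_embedding(embeddings, speakers, is_vc, target_speaker):
    """Return one embedding for `target_speaker` built only from recordings.

    Hypothetical helper: keeps the posterior embeddings of the speaker's
    original (non-VC) utterances and averages them. Averaging is an
    assumption of this sketch; any embedding from the kept set could be used.
    """
    embeddings = np.asarray(embeddings, dtype=float)
    speakers = np.asarray(speakers)
    is_vc = np.asarray(is_vc, dtype=bool)

    # Keep utterances that belong to the speaker AND are original recordings.
    mask = (speakers == target_speaker) & ~is_vc
    if not mask.any():
        raise ValueError(f"no recorded utterances for speaker {target_speaker!r}")
    return embeddings[mask].mean(axis=0)


# Toy data: three utterances of speaker "a" (one of them VC-augmented)
# and one VC-augmented utterance of speaker "b".
toy_embeddings = [[0.0, 0.0], [2.0, 2.0], [10.0, 10.0], [5.0, 5.0]]
toy_speakers = ["a", "a", "a", "b"]
toy_is_vc = [False, False, True, True]

selected = select_recorded_embedding(toy_embeddings, toy_speakers, toy_is_vc, "a")
```

In this toy setup the VC-augmented utterance of speaker "a" is excluded, so the result depends only on the two recorded utterances. A speaker with no recorded utterances raises an error rather than silently falling back to augmented data.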

Demo

1. Female Speakers

First language: English

| Language \ System | CM-TTS | MM-TTS | MM-TTS\(_{\mathrm{vae}}\) | GT |
|---|---|---|---|---|
| English (Spoken) | | | | |
| Korean (Unspoken) | N/A | | | |
| Japanese (Unspoken) | N/A | | | |

First language: Korean

| Language \ System | CM-TTS | MM-TTS | MM-TTS\(_{\mathrm{vae}}\) | GT |
|---|---|---|---|---|
| Korean (Spoken) | | | | |
| Japanese (Unspoken) | N/A | | | |
| English (Unspoken) | N/A | | | |

First language: Japanese

| Language \ System | CM-TTS | MM-TTS | MM-TTS\(_{\mathrm{vae}}\) | GT |
|---|---|---|---|---|
| Japanese (Spoken) | | | | |
| English (Unspoken) | N/A | | | |
| Korean (Unspoken) | N/A | | | |

2. Male Speakers

First language: English

| Language \ System | CM-TTS | MM-TTS | MM-TTS\(_{\mathrm{vae}}\) | GT |
|---|---|---|---|---|
| English (Spoken) | | | | |
| Korean (Unspoken) | N/A | | | |
| Japanese (Unspoken) | N/A | | | |

First language: Korean

| Language \ System | CM-TTS | MM-TTS | MM-TTS\(_{\mathrm{vae}}\) | GT |
|---|---|---|---|---|
| Korean (Spoken) | | | | |
| Japanese (Unspoken) | N/A | | | |
| English (Unspoken) | N/A | | | |

First language: Japanese

| Language \ System | CM-TTS | MM-TTS | MM-TTS\(_{\mathrm{vae}}\) | GT |
|---|---|---|---|---|
| Japanese (Spoken) | | | | |
| English (Unspoken) | N/A | | | |
| Korean (Unspoken) | N/A | | | |